Table of contents

- Why Use Ingress Objects?

- Why Are Services Not the Best Fit for External Access?

- Get pods

- Verify that the Ingress add-on is enabled

- Please wait for a few seconds before running the next commands

- Using Kubernetes Ingress to expose Services via externally reachable URLs

- Using Kubernetes Ingress to load balance traffic

- Using Kubernetes Ingress to terminate SSL / TLS

- Using Kubernetes Ingress to route HTTP traffic to multiple hostnames at the same IP address

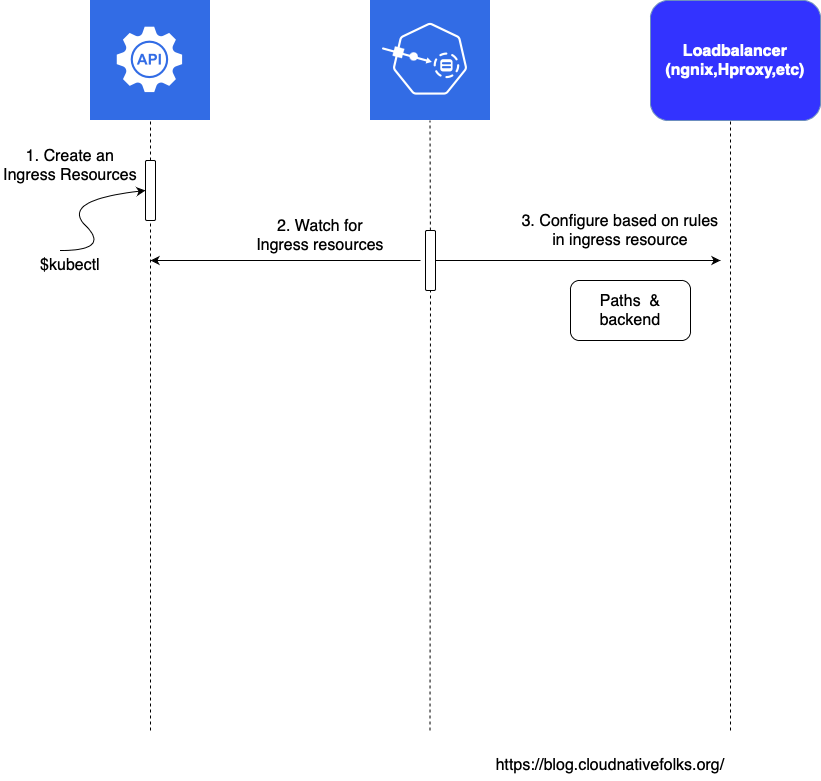

- The sequence of events followed to create an Ingress resource

Why Use Ingress Objects?

Applications that are not accessible to users are useless. Kubernetes Services provide accessibility with a usability cost. Each application can be reached through a different port. We cannot expect users to know the port of each service in our cluster.

Ingress objects manage external access to the applications running inside a Kubernetes cluster.

While, at first glance, it might seem that we already accomplished that through Kubernetes Services, they do not make the applications truly accessible. We still need forwarding rules based on paths and domains, SSL termination and a number of other features.

In a more traditional setup, we’d probably use an external proxy and a load balancer. Ingress provides an API that allows us to accomplish these things, in addition to a few other features we expect from a dynamic cluster.

Why Are Services Not the Best Fit for External Access?

Services alone won’t suffice

We cannot explore solutions before we know what the problems are. Therefore, we’ll re-create a few objects using the knowledge we already gained. That will let us see whether Kubernetes Services satisfy all the needs users of our applications might have. Or, to be more explicit, we’ll explore which features we’re missing when making our applications accessible to users.

We already discussed that it is a bad practice to publish fixed ports through Services. That method is likely to result in conflicts or, at the very least, create the additional burden of carefully keeping track of which port belongs to which Service. We already discarded that option before, and we won’t change our minds now.
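To make the port bookkeeping problem concrete, here is a minimal sketch of a Service that publishes a fixed port on every node. The Service name, the app label, and the port 30080 are hypothetical and chosen only for illustration.

```yaml
# Hypothetical Service that pins a fixed node port (illustration only).
apiVersion: v1
kind: Service
metadata:
  name: hello-world          # assumed name
spec:
  type: NodePort
  selector:
    app: hello-world         # assumed Pod label
  ports:
    - port: 8080             # port of the Service inside the cluster
      targetPort: 8080       # container port the traffic is forwarded to
      nodePort: 30080        # fixed port opened on every node (default range 30000-32767)
```

Every additional Service published this way needs its own unused nodePort, and users would have to remember which port belongs to which application.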

Why are Ingress Controllers required?

We need a mechanism that will accept requests on pre-defined ports (e.g., 80 and 443) and forward them to Kubernetes Services. It should be able to distinguish requests based on paths and domains as well as to be able to perform SSL offloading.

Kubernetes itself does not have a ready-to-go solution for this. Unlike other types of Controllers, which are typically part of the kube-controller-manager binary, an Ingress Controller needs to be installed separately. Instead of a Controller, Kubernetes offers the Ingress resource, which third-party solutions can use to provide request forwarding and SSL features. In other words, Kubernetes only provides an API, and we need to set up a Controller that will use it.

Fortunately, the community already built a myriad of Ingress Controllers. We won’t evaluate all of the available options since that would require a lot of space, and it would mostly depend on your needs and your hosting vendor.

Enabling Ingress on your Kubernetes cluster

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.3.0/deploy/static/provider/cloud/deploy.yaml

Get pods

    kubectl get pods --namespace=ingress-nginx

Verify that the Ingress add-on is enabled

    kubectl get pods -n ingress-nginx | grep ingress

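By default, the Ingress Controller is configured with only two endpoints: /healthz, which reports the controller’s health, and a default backend that answers every other request. To try them out, forward local port 3000 to the controller Service:

    nohup kubectl port-forward -n ingress-nginx service/ingress-nginx-controller 3000:80 --address 0.0.0.0 > /dev/null 2>&1 &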

Please wait a few seconds before running the next commands.

    curl -i "0.0.0.0:3000/healthz"
    curl -i "0.0.0.0:3000/something"

Output

    HTTP/1.1 200 OK
    Server: nginx/1.15.9
    Date: Mon, 10 Jun 2022 12:02:11 GMT
    Content-Type: text/html
    Content-Length: 0
    Connection: keep-alive

Using Kubernetes Ingress to expose Services via externally reachable URLs

Now that an Ingress Controller has been set up, we can start using Ingress Resources, which are another resource type defined by Kubernetes. Ingress Resources are used to update the configuration within the Ingress Controller. Using Nginx as an example, a set of pods runs an Nginx instance that watches Ingress Resources for changes so that it can update and then reload its configuration.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-world
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      ingressClassName: nginx
      rules:
        - host: test.example.com
          http:
            paths:
              - path: /hello-world
                pathType: Prefix
                backend:
                  service:
                    name: hello-world
                    port:
                      number: 8080

This is a basic Ingress Resource. In the spec, the ingressClassName field selects which ingress class we want to use, in our case nginx, which lets the Nginx Ingress Controller know that it needs to monitor this resource and apply its rules. When multiple controllers run in the same cluster, the same field determines which Ingress Controller should handle the resource.
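For reference, the class named in ingressClassName is itself a small Kubernetes object. The sketch below shows roughly what an IngressClass for the Nginx controller looks like. The ingress-nginx manifest applied earlier is expected to create one already, so this is normally not something you apply yourself; treat the exact values as an assumption and compare them with the output of `kubectl get ingressclass nginx -o yaml` on your cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                        # the value referenced by spec.ingressClassName
spec:
  controller: k8s.io/ingress-nginx   # identifies the controller that serves this class
```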

The spec defines what we expect to be configured. Within this section we are going to define a rule, or set of rules, the host the rules will be applied to, whether or not it is http or https traffic as well as the path we are watching, and the internal service and port where the traffic will be sent.

Traffic that is sent to test.example.com/hello-world will be directed to the service hello-world on port 8080. This is a basic setup that provides layer 7 functionality. Now we can use a single DNS entry for a host, or set of hosts, and provide path based load balancing. Next we will expand on this by adding another path for routing traffic.
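The Ingress above only works if a Service named hello-world exists in the same namespace and listens on port 8080. A minimal sketch of such a Service follows; the app: hello-world selector and the target port are assumptions about how the application Pods are labeled and configured.

```yaml
# Hypothetical backend Service for the hello-world Ingress rule.
apiVersion: v1
kind: Service
metadata:
  name: hello-world        # must match backend.service.name in the Ingress
spec:
  type: ClusterIP          # internal only; external traffic arrives through the Ingress Controller
  selector:
    app: hello-world       # assumed label on the application Pods
  ports:
    - port: 8080           # must match backend.service.port.number in the Ingress
      targetPort: 8080     # assumed container port
```

Because the Service is of type ClusterIP, no node port has to be reserved for it.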

Using Kubernetes Ingress to load balance traffic

Now we can add another path. This allows us to load balance between different backends for our application. We can split up traffic and send it to different service endpoints and deployments based on the path. This can be beneficial for endpoints that receive more traffic, as we can scale a single deployment for /hello-world without having to scale up /hello-universe.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-world-and-universe
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      ingressClassName: nginx
      rules:
        - host: test.example.com
          http:
            paths:
              - path: /hello-world
                pathType: Prefix
                backend:
                  service:
                    name: hello-world
                    port:
                      number: 8080
              - path: /hello-universe
                pathType: Prefix
                backend:
                  service:
                    name: hello-universe
                    port:
                      number: 8080

Using Kubernetes Ingress to terminate SSL / TLS

Setting up services using HTTP is great, but what we really need is HTTPS. To do this we need to install Cert-Manager, a Kubernetes add-on that introduces its own Custom Resource Definitions. Cert-Manager automates TLS certificate management using multiple providers.

artifacthub.io/packages/helm/cert-manager/c..

Once we have installed Cert-Manager we can create certificates with annotations in the Ingress Resource, or manually by creating our own Certificates. In our example we are going to use annotations as it simplifies the configuration.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-world
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
        nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
        cert-manager.io/cluster-issuer: letsencrypt-prod
    spec:
      ingressClassName: nginx
      tls:
        - hosts:
            - test.example.com
          secretName: test-example-tls
      rules:
        - host: test.example.com
          http:
            paths:
              - path: /hello-world
                pathType: Prefix
                backend:
                  service:
                    name: hello-world
                    port:
                      number: 8080

This configuration takes advantage of a couple of annotations. The Nginx Ingress Controller provides options that can be configured using annotations; here we use force-ssl-redirect to redirect HTTP traffic to HTTPS. The Cert-Manager annotation cluster-issuer lets us select which issuer we want to use for our certificate. In the hosts section we specify the host we want to route traffic for, test.example.com, a subdomain of example.com. The secretName selects the Secret that stores the certificate information; it is created and populated automatically because we are using a Cert-Manager annotation.
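The cluster-issuer annotation assumes that a ClusterIssuer named letsencrypt-prod already exists in the cluster. A minimal sketch of such an issuer, using the ACME HTTP-01 challenge, could look like the following. The e-mail address and the name of the account key Secret are placeholders, not values from this setup.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod                   # must match the cert-manager.io/cluster-issuer annotation
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory   # Let's Encrypt production endpoint
    email: admin@example.com               # placeholder contact address (assumption)
    privateKeySecretRef:
      name: letsencrypt-prod-account-key   # hypothetical Secret holding the ACME account key
    solvers:
      - http01:
          ingress:
            class: nginx                   # answer HTTP-01 challenges through the Nginx Ingress Controller
```

While experimenting, it is usually safer to point the server field at the Let's Encrypt staging endpoint first, since the production endpoint enforces strict rate limits.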

Using Kubernetes Ingress to route HTTP traffic to multiple hostnames at the same IP address

Multiple DNS records can be pointed at the same public IP address used for our LoadBalancer service. We can add a rule per host, either in a single Ingress resource as shown here or in a separate Ingress resource per host, which allows us to route traffic for multiple hostnames while using the same external IP for A record creation.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-world-prod
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      ingressClassName: nginx
      rules:
        - host: test.example.com
          http:
            paths:
              - path: /hello-world
                pathType: Prefix
                backend:
                  service:
                    name: hello-world-test
                    port:
                      number: 8080
        - host: prod.example.com
          http:
            paths:
              - path: /hello-world
                pathType: Prefix
                backend:
                  service:
                    name: hello-world-prod
                    port:
                      number: 8080

We have added a prod version of hello-world. We are using the hostname prod.example.com and pointing its traffic to a new service called hello-world-prod. Traffic comes in through the same Load Balancer IP address and is routed based on the hostname and the path.
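The same result can be reached with one Ingress resource per host, as mentioned at the start of this section. The sketch below shows only the prod half as a standalone resource; the resource name is an assumption, and a matching resource for test.example.com would be defined the same way with its own name and backend Service.

```yaml
# Variant: a dedicated Ingress resource for the prod hostname only.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-prod-only        # hypothetical name for the per-host resource
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx
  rules:
    - host: prod.example.com
      http:
        paths:
          - path: /hello-world
            pathType: Prefix
            backend:
              service:
                name: hello-world-prod
                port:
                  number: 8080
```

Splitting by host keeps each environment's routing rules independent, so a change to the test rules never touches the resource that serves prod.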

The sequence of events followed to create an Ingress resource